Where AI meets DEIB

According to Tech.co, since ChatGPT was released in late 2022, it’s been picked up by almost half of U.S. companies, and 93% of these firms are looking to expand its use. But what are ChatGPT's implications regarding Diversity, Equity, Inclusion, and Belonging (DEIB)?

From helpful DEIB use cases to the biases that AI can carry, we’ve put together this timely guide to help you understand how innovators are approaching ChatGPT and other AI tools today. Browse key statistics, trends, impacts, and opportunities for AI implementation from PowerToFly thought leaders. AI has plenty of positive implications in the workplace, so let’s explore what’s working for business leaders in the AI arena, with a special focus on the possibilities (and challenges) that this fast-advancing technology brings to DEIB.


What is ChatGPT? AI tools explained

Artificial intelligence definitions & acronyms

Bots: Bots are software “robots” that handle business process automation. They’re typically used in specific processes within a work management software platform. Customer service chatbots are one example of bot deployment.

Generative AI: Generative AI is artificial intelligence that generates content. This includes chatbots that respond with original content rather than pre-programmed or canned answers. This content may be fully original, or it may be curated and repurposed from multiple available sources.

RPA: Robotic process automation (RPA) is the catch-all term for the work that bots do. RPA bots make up the digital workforce of AI by making yes/no decisions and following simple directions based on assigned inputs.

BPA: Business process automation refers to the digitization of business processes, including assignment, submission, evaluation, and approval, within a centralized information management platform. Popular platforms include Oracle and the open-source platform Alfresco.

LLM: A large language model (LLM) is a language-producing tool built on a neural network, typically one using the transformer architecture, that is trained on vast amounts of text to predict the most likely next word (or word fragment) in a sequence. ChatGPT, BLOOM, and PaLM are all built on LLMs.

AI: Artificial intelligence is an area of computer science that studies and develops the ability of machines to solve problems. AI is anything that enables computers to function in ways that resemble cognitive human intelligence. When machines handle strategy development, human interaction, and autonomous activity without human aid, it is generally considered AI.

ML: Machine learning (ML) is a subset of AI in which systems learn patterns from data rather than following only explicitly programmed rules. A common example of ML in enterprise systems is optical character recognition (OCR) for data intake and digital organization.


ChatGPT

ChatGPT is an artificial intelligence chatbot developed by the research lab OpenAI. ChatGPT launched in November 2022, and OpenAI has since opened up its “cutting-edge language (not just chat!) and speech-to-text capabilities” to developers. GPT stands for generative pre-trained transformer, the family of models behind ChatGPT that produce its generative AI responses.

Essentially, ChatGPT is an LLM that uses AI to generate content. What sets it apart is its accessibility. Because it takes instructions as written prompts, it is accessible to users who are less technologically inclined: input parameters can be described and revised in everyday language, in a back-and-forth chat format.

To use ChatGPT, users need only to create an OpenAI account using their email, birthdate, and phone number.

OpenAI itself acknowledges that ChatGPT has limitations: “The model is often excessively verbose and overuses certain phrases, such as restating that it’s a language model trained by OpenAI.” In fact, this quirk has been one of the most obvious giveaways when ChatGPT content is used (unethically) in academia.

93% of the firms that picked up ChatGPT are looking to expand its use.

ChatGPT reached 100 million users within its first two months.

Pricing of ChatGPT

During its research preview, ChatGPT was free to use. Now, pricing is tiered. Basic ChatGPT remains free, while ChatGPT Plus costs $20 (USD) per month. ChatGPT Pro is in its testing phase and will presumably cost more. The main reasons to pay are availability (basic users sometimes receive an “at capacity” message during times of high demand) and faster responses to prompts.

ChatGPT was first offered as a standalone platform. In March 2023, OpenAI made ChatGPT and Whisper (its speech-to-text model) available through application programming interfaces (APIs), so developers could integrate them into their own products.
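For the technically curious, here is roughly what an API integration looks like. This minimal sketch uses only Python’s standard library to build, but not send, a request to OpenAI’s Chat Completions endpoint. The helper name `build_chat_request` and the default model name are illustrative assumptions, and the request format should be checked against OpenAI’s current API documentation before use.

```python
import json
import urllib.request

# OpenAI's Chat Completions endpoint; verify against current documentation.
API_URL = "https://api.openai.com/v1/chat/completions"


def build_chat_request(api_key: str, prompt: str,
                       model: str = "gpt-3.5-turbo") -> urllib.request.Request:
    """Build (but do not send) a POST request asking the model to answer `prompt`.

    The default model name is an assumption for illustration.
    """
    body = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        API_URL,
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


if __name__ == "__main__":
    req = build_chat_request("YOUR_API_KEY", "Summarize these meeting notes: ...")
    print(req.get_method(), req.full_url)
    # Sending it would look like urllib.request.urlopen(req); the reply text
    # is found at choices[0]["message"]["content"] in the parsed JSON response.
```

Keeping request construction separate from sending makes it easy to log, review, or redact what your organization is about to share with an outside provider.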

The state of AI adoption: An overview

Now that we’ve clarified the top-line theoretical capabilities of AI technology, let’s see what role AI is playing in current global enterprise.

AI corporate use in general

Early implementation of AI solutions began with veteran software consulting firms in the tech industry working on legacy enterprise software.

After that came the rise of the digital transformation industry, a trend that created significant demand for software consultants to implement automation at various levels of work processes. RPA developer Automation Anywhere, headquartered in San Jose, California, launched the first bot store in March 2018.

Widespread use of business process automation (BPA) grew in industries and markets that follow International Organization for Standardization (ISO) standards. Software as a service (SaaS) with cloud-based hosting also became popular and made digitization far more accessible for small and medium-sized organizations. APIs allowed organizations to integrate specialized tools without excessive technical or budget requirements.

Deloitte, McKinsey, and other major firms now refer to the current state of digitization as Industry 4.0, a term describing extensive digitization of the manufacturing sector (along with other industries). Industry 4.0, or the fourth industrial revolution (4IR), applies to companies of all sizes.

ChatGPT corporate use: Specifics

According to Tech.co, here are 10 ways ChatGPT has been implemented by companies thus far.

- Customer service — chatbots manage inquiries and track customer data simultaneously.

- External communications — to streamline content production for social media or blogs.

- Coding — to write code logic, fix syntax errors, and translate from one programming language to another.

- Personal assistance — administrative tasks, including data entry and email management.

- Email writing — personalized cold emails and sales cadences.

- Copywriting — SEO blogging, often without transparency about use of ChatGPT.

- Time management — scheduling and prioritizing tasks.

- Presentation design — original PowerPoint or Slides presentations.

- Keyword research — link building efforts and populating content calendars.

- Meeting management — transcripts of minutes and summarizing meeting notes.

Insight from PowerToFly's Executive Forum

On May 12th, 2023, PowerToFly held an executive forum on the intersection of ChatGPT and DEIB. During that dialogue, the executives who joined us shared that they had not yet been tasked with implementing ChatGPT as a tool, nor had it been planned for company-wide use at any of their organizations. Still, as leaders of innovative projects, all were interested in the tool and its potential benefits. Some used it unofficially and/or on internal projects. Tasks mentioned by participants included:

- Scraping SERPs — creating a de facto portal to find curated search engine results efficiently.

- Internal communications — using ChatGPT as a “copilot” to create internal content, with additional editing and research to polish the final content.

- Recruiting — drafting rejection emails with a professional standard of language.

- Coding — on a data analysis team, opening up the possibility of a new job role as a Prompt Expert.

AI implementation: The philosophical question

More than anything, the executive forum brought forth comments and questions from participants looking to address the philosophical and ethical issues surrounding the use of ChatGPT in the workplace. The discussion centered on whether AI eliminates bias or perpetuates inequality. As a tool, ChatGPT arguably can do both; it all depends on how it’s used.

ChatGPT has been publicly criticized from both sides of the political spectrum. The forum’s subject matter expert, Tomas Chamorro-Premuzic (Chief Innovation Officer at ManpowerGroup and author of I, Human: AI, Automation, and the Quest to Reclaim What Makes Us Unique), pointed out that in the U.S., liberals have called out its sexist and racist tendencies. Meanwhile, conservative celebrities like Elon Musk have attacked the chatbot for its seemingly “woke” responses.

Does ChatGPT eliminate bias or perpetuate inequality? Let’s look at evidence for both arguments.

Eliminating bias with ChatGPT

DEIB done right will ensure that company processes and materials are as unbiased as possible.

- Blind evaluations. Job application reviews are typically quite subjective. You may tell a human recruiter or application reviewer to ignore certain details, but those details can’t be unseen. Gender, ethnicity (as inferred from names), and prestigious universities listed on a resume will likely stay in the reviewer’s mind. Tell a machine to ignore a field, on the other hand, and it does.

- Revealing unintentional bias. Using ChatGPT to write or evaluate written materials can help scrub unconscious bias from company processes. ChatGPT can be prompted to rewrite job descriptions without gender-coded or otherwise exclusionary language, making them more inclusive, and to reveal bias in materials such as training content.

- Identifying the status quo. ChatGPT can be used as an objective observer. To an extent, it can mitigate bias by balancing out the human element in any task. When prompted to, it can make us more aware of our existing biases.
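The “tell a machine to ignore any field” idea behind blind evaluations can be as simple as removing fields before a record reaches a reviewer. The Python sketch below assumes a hypothetical application record stored as a dictionary; the field names and the `blind_copy` helper are illustrative, not part of any real applicant-tracking system.

```python
from typing import Any

# Hypothetical field names; a real applicant-tracking system will use its own schema.
IDENTIFYING_FIELDS = frozenset({"name", "gender", "date_of_birth", "photo_url", "university"})


def blind_copy(application: dict[str, Any],
               hidden: frozenset[str] = IDENTIFYING_FIELDS) -> dict[str, Any]:
    """Return a new record without the hidden fields; the original is left untouched."""
    return {field: value for field, value in application.items() if field not in hidden}


if __name__ == "__main__":
    application = {
        "name": "Jordan Lee",
        "university": "State University",
        "skills": ["SQL", "Python"],
        "years_experience": 4,
    }
    print(blind_copy(application))
    # {'skills': ['SQL', 'Python'], 'years_experience': 4}
```

Removing explicit fields is only a first step: other details, such as zip codes or club memberships, can still act as proxies for the same characteristics.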

Perpetuating inequality with ChatGPT

DEIB best practices remind us over and over that in order to make progress towards equity, we must remove the historical biases that plague our societies and work culture. In many ways, ChatGPT doesn’t exist outside of those biases – unchecked, it will regularly reflect the harmful status quo that exists in the body of material in any company and on the internet at large.

- Sexism. ChatGPT produces sexist responses. One response insisting that doctors are male and nurses are female went viral.

- Reinforcing the gender binary. ChatGPT produced a response in which it not only assumed a lawyer to be male and a paralegal to be female, but also assumed that a pregnant person must be a woman. The technology does not recognize the possibility of pregnancy for gender nonbinary people, trans men, or others. In fact, ChatGPT responded by saying “pregnancy is not possible for men.” This is despite a trans man making international headlines for giving birth in 2008, a scenario that has since become much more common.

- Job elimination. Though terms like “digital workforce” are joining the lexicon, bots are not people. A bot works for free without the need for compensation, rest, or just treatment. The use of AI tools improves efficiency and revenue by eliminating work previously done by humans.


Two sides of the same coin

The benefits and drawbacks of ChatGPT and other AI tools are two sides of the same coin. AI applied to business is an obvious way to improve the bottom line, but it’s also directly linked to layoffs and job elimination. According to a LinkedIn survey:

- 25% of companies using ChatGPT have saved over $75,000 already.

- 48% of companies using ChatGPT say it has already replaced workers.

- 63% of business leaders believe ChatGPT will lead to more layoffs within five years.

When applied to DEIB efforts, AI can be an effective and still human-centric way to address historical inequities and remove potential bias without human contamination. The same LinkedIn survey reported:

- Despite concerns about job displacement, 90% of business leaders believe that experience with ChatGPT is a beneficial skill for job seekers.

- 49% of companies currently use ChatGPT, and an additional 30% plan to use it soon.

- 93% of companies using ChatGPT plan to expand its use.

- 85% of companies planning to use ChatGPT will start doing so within the next six months.

Benefits of AI implementation

The conversation surrounding AI is often framed as benefits vs. drawbacks. Let’s look at why everyone is excited about this technology.

Working faster. One benefit of all artificial intelligence in business is that it reduces the workload for the human workforce. While a human sorts one file at a time, bots give digital document management systems the ability to sort files by the tens of thousands. Work simply gets done faster.

Improving employee experience. Basic AI tech removes a monotonous slice of repetitive work, like data entry, from the human workload. Employees may enjoy their jobs more, be happier at work, and stay motivated. This is good for morale and reduces redundant effort.

Working smarter. The guesswork of data collection is gone. Integrated AI can assess business intelligence with precision. Computers make it easy to record every small detail of activity. Using centralized systems, we can record every system-based interaction, from the CEO to the entry-level worker.

With accurate and transparent information, companies using AI also work smarter through real-time analysis. What used to be done by human business analysts can now be assessed digitally. As the data piles up, bots can process it to provide rich output on trends, mistakes, opportunities for improvement, and even predictions about the future. Executives can use this “big data” to optimize the company’s opportunities for revenue, and its opportunities within DEIB. When you consider that just 20% of HR professionals say their organization establishes and measures DEIB analytics to a high or very high degree, the potential for improvement is considerable.
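As a small example of the kind of DEIB analytics described above, the sketch below computes how each self-reported group is represented at each job level. The record format, field names (`level`, `gender`), and sample data are hypothetical; a real analysis would draw on voluntarily disclosed HR data and follow your privacy policies.

```python
from collections import Counter, defaultdict


def representation_by_level(employees: list[dict[str, str]],
                            attribute: str) -> dict[str, dict[str, float]]:
    """Share of each self-reported group within each job level.

    Employees who did not disclose the attribute are counted as "not disclosed"
    rather than dropped, so the shares at each level still sum to 1.
    """
    counts: defaultdict[str, Counter] = defaultdict(Counter)
    for person in employees:
        counts[person["level"]][person.get(attribute, "not disclosed")] += 1
    return {
        level: {group: n / sum(groups.values()) for group, n in groups.items()}
        for level, groups in counts.items()
    }


if __name__ == "__main__":
    staff = [  # hypothetical sample records
        {"level": "entry", "gender": "woman"},
        {"level": "entry", "gender": "man"},
        {"level": "executive", "gender": "man"},
        {"level": "executive"},
    ]
    print(representation_by_level(staff, "gender"))
```

Tracking these shares over time, level by level, is one simple way to see whether diversity at entry level carries through to leadership.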

Drawbacks of AI implementation

What are the drawbacks of embracing AI for everyday tasks?

It’s ethically murky. The general public is still unsure about the ethics of AI. The world’s first AI supermodel, Shudu, is a Black woman, but her image is owned by a White creator. Beyond the public controversy, the optics and ethics of scenarios like this run directly counter to DEIB values. The risk of AI perpetuating stereotypes is also real. Consider: A scientist at the University of California, Berkeley, got ChatGPT to say that only White or Asian men make good scientists. ChatGPT draws its language and information from the internet, and the internet is full of bias. ChatGPT is also known to produce what insiders call “AI hallucinations”: information the model has simply invented. Without human oversight and guardrails, ChatGPT could spread and reinforce stereotypes and falsehoods about marginalized groups.

There’s liability. Because ChatGPT is so new and questions about the ethics of its use remain unanswered, implementing ChatGPT or other AI tools may expose your company to liability. This is a risk management issue, and it’s especially important for any company that manages sensitive customer data or works in a highly regulated industry. Adopting too early carries real risk. There’s also potential for public scandal when implementing a tool that is currently buzzing in multiple industries and international news cycles.

It could be a race to the bottom. Using a tool like ChatGPT to write external (and internal) communications may represent a race to the bottom in terms of generic content and branding. Take writing your company’s DEIB statement for example. Best practices clearly state that a statement proclaiming your company’s values surrounding DEIB should be unique, customized to your mission, and specific in reference to your efforts and future vision. AI copy has the potential to dilute the human element behind a company’s vision in exchange for a generic, high-performance statement. And as we’ll see in the following misstep from Vanderbilt University’s DEIB office, insincere DEIB messaging, or even the perception of insincerity in this messaging, can be seriously harmful.

Real AI use cases in the news

Current news revolving around AI technology reflects the what-ifs, unknowns, skepticism, and incredible potential that surround AI at the moment. It also reflects some of the DEIB dilemmas that the adoption of AI creates. Let’s take a look at some recent examples.

WGA strike

The Writers Guild of America is on strike, sending a lot of TV shows and movies into hiatus. Primary grievances revolve around compensation and working conditions — and there’s an undercurrent related to ChatGPT.

While ChatGPT isn’t a particularly good or funny writer at the moment, writers are concerned they could become mere script editors for AI. AI could come up with a basic story from a few inputs (a Christmas rom-com, say), and a writer would be hired for less pay to touch it up. AI has increasingly become a regular part of Hollywood. It’s the reason a young Carrie Fisher could reprise her role in Star Wars’ Rogue One, and how actors can be replaced with convincing CGI characters.

Workday lawsuit

Workday, an enterprise cloud software company, is facing a lawsuit alleging discrimination by its AI technology. At the center of the case are the experiences of Derek Mobley, a Black man over 40 who is neurodiverse. Mobley applied to 80 to 100 jobs at companies that used Workday’s software, and he was rejected from all of them. Workday insists that the lawsuit doesn’t hold water and that it is “committed to trustworthy AI.”

This is not unlike Amazon’s situation in 2018, when news broke that its AI recruiting algorithm was biased against women. Amazon scrapped the project and insisted its recruiters never used the software to evaluate candidates, but the episode raised questions about the reliability of AI. Still, AI supporters are quick to point out that algorithms merely reflect patterns in the data we give them. It isn’t necessarily the AI that’s biased; it’s us.

Vanderbilt’s school shooting gaffe

Vanderbilt University’s DEI department came under scrutiny when it used ChatGPT to write a mass email to students following a shooting at another university. After reminding students to take care of each other, the email concluded with the asterisked line: “Paraphrase from OpenAI’s ChatGPT AI language model, personal communication, February 15, 2023.”

Accusations that the university was tone-deaf, at best, quickly followed. Shaun Harper, a Provost Professor at the University of Southern California, summarized why a misstep like this matters to organizations in a piece for Forbes: “DEI communications from many C-suites are already plagued with authenticity problems. Statements from the CEO about the tragic police shootings of unarmed Black Americans, as well as the seemingly obligatory history/heritage month emails to celebrate LGBTQIA+ Pride and various ethnic groups each year, are typically written by someone else. Employees can sometimes detect the artificiality. Ghostwriting therefore undermines executive integrity on DEI. ChatGPT will exacerbate this.”

Concerns for companies to account for

Are people drawn to ChatGPT and similar content generators because AI is a shiny new tech toy, or because it actually serves a need in the industry? Is it a business tool or just a financial product? The answer depends on how AI tools are used. The Economist advises business leaders to “worry wisely.” As AI use increases, business leaders need to know which specific risks to watch for.

Data security

Make sure you know what’s happening to the information you’re feeding your AI model. Imagine you use AI to screen internal candidates for promotions. That means you’re providing training, performance, salary, and other sensitive information to the AI model.

Be especially protective of data that is highly regulated, such as private data and sensitive financial or medical information. Questions you need to ask and answer are:

- Where does that data go? Can you delete it?

- Is your data stored? For how long?

- Is the data used by the AI provider to train other AI models?

- Is the data shared with partners? Is it sold?

- Who has access to this data on the AI provider’s end?
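One practical safeguard, whatever the answers to these questions, is to mask obvious personal data before text is sent to an outside AI tool. The rough Python sketch below uses simplified, U.S.-centric regular expressions chosen for illustration; they will miss many formats and are not a substitute for a vetted data-protection tool or a careful read of your provider’s terms.

```python
import re

# Simplified, illustrative patterns; real-world data comes in many more formats.
EMAIL = re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+")
US_SSN = re.compile(r"\b\d{3}-\d{2}-\d{4}\b")
US_PHONE = re.compile(r"\(?\b\d{3}\)?[-.\s]?\d{3}[-.\s]?\d{4}\b")


def mask_pii(text: str) -> str:
    """Replace emails, U.S. Social Security numbers, and U.S. phone numbers with placeholders."""
    text = EMAIL.sub("[EMAIL]", text)
    text = US_SSN.sub("[SSN]", text)
    text = US_PHONE.sub("[PHONE]", text)
    return text


if __name__ == "__main__":
    note = "Reach jane.doe@example.com or 555-123-4567; SSN 123-45-6789."
    print(mask_pii(note))
    # Reach [EMAIL] or [PHONE]; SSN [SSN].
```

Masking before sending means that even if a provider stores prompts or uses them for training, the most sensitive identifiers never leave your systems.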

Plagiarism

More visible in academia, AI plagiarism is a unique gray area with ChatGPT because of how it sources language. Take poetry, for example. Copying a famous poem line by line is easily recognizable. A poem pieced together from lines of five different poems may not be detectable by the average reader, but it will still be flagged by anti-plagiarism software. An AI that learns language and style from tens of thousands of sources and then generates a piece based on a prompt will be virtually undetectable. Though not original, the result is new. Does it fall under Russian playwright Anton Chekhov’s famous line that “there is nothing new in art except talent”? Or is it merely plagiarism of many authors at once? Remember, ChatGPT does not cite its references or sources.
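To see why stitched-together lines get flagged while fluent generated text may not, here is a simplified Python sketch of one common detection idea: measuring how many short word sequences (n-grams) a candidate text shares with a known source. Real anti-plagiarism products use their own, more sophisticated methods; the function names and the choice of three-word sequences are assumptions for illustration.

```python
def word_ngrams(text: str, n: int = 3) -> set[tuple[str, ...]]:
    """Lowercased word n-grams, with surrounding punctuation stripped from each word."""
    words = [w.strip(".,;:!?\"'").lower() for w in text.split()]
    words = [w for w in words if w]
    return {tuple(words[i:i + n]) for i in range(len(words) - n + 1)}


def overlap_score(candidate: str, source: str, n: int = 3) -> float:
    """Fraction of the candidate's n-grams that also appear in the source (0.0 to 1.0)."""
    cand = word_ngrams(candidate, n)
    if not cand:
        return 0.0
    return len(cand & word_ngrams(source, n)) / len(cand)


if __name__ == "__main__":
    source = "Shall I compare thee to a summer's day"
    print(overlap_score("Shall I compare thee to a winter night", source))  # 4 of 6 shared
    print(overlap_score("An entirely different line of verse", source))     # 0.0
```

A copied or lightly edited line shares many n-grams with its source and scores high, while a reworded passage that shares no three-word runs scores 0.0, which is why heavily paraphrased, AI-generated text can slip past this kind of check.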

Blurring of employment norms

VICE reports that an unknown number of people have used ChatGPT to hold multiple jobs (two to four) at once without their employers’ knowledge. These hustlers call themselves “overemployed” and say ChatGPT does about 80% of certain jobs. A job description for an employee or contract worker may not state that the candidate can’t hold more than one full-time position, but that assumption now needs to be made explicit.


What jobs are affected?

The types of jobs affected by AI may add to negative perceptions of the technology. DEIB efforts aim to improve company diversity and build brands where people of all backgrounds and talents are valued.

Because diversity tends to be highest at the lowest levels of a company, jobs eliminated by AI may cause a drop in diversity. Niche contractors hired to fill DEIB knowledge gaps (for example, copywriters specializing in an LGBTQIA+ perspective) would be among those most affected by ChatGPT taking over a company’s copywriting tasks.

The customer service field is another area where DEIB initiatives flourish. Today, 69% of reps are women and 44% are BIPOC. The ability to problem-solve in different languages and communication styles is a win for both customer experience and business outcomes.

The most effective customer service representatives are those who bring cultural relevance to their work. Losing out on the advantages of staffing a diverse customer service team is another risk of ChatGPT.

Other DEIB-specific concerns

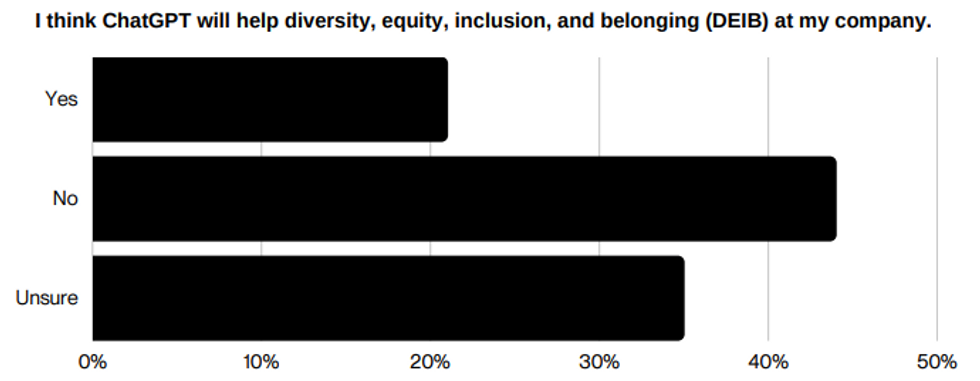

In May 2023, PowerToFly polled hundreds of professionals on LinkedIn about how they perceive the impact of this new technology for DEIB. Forty-four percent of respondents think ChatGPT will have a positive influence on DEIB at their company. Twenty-one percent disagree, and the remaining 35% are unsure.

It isn’t surprising that more than half of respondents (56%) either didn’t expect ChatGPT to have a positive influence on DEIB or weren’t sure, given the real risks this technology carries. We’ve covered several in this report already. Here are three more DEIB-specific concerns that company leaders should pay attention to when implementing AI for business tasks.

Detecting AI bias. Organizations need to be able to explain the results of any AI models that they’re using. That means:

- Understanding exactly how the AI works.

- Having developers explain what technology and data sets are used to construct the model.

- Ensuring that data sets being fed to the AI are free from bias.

Increasingly user-friendly AI like ChatGPT means that organizations may begin using the technology without really understanding how the AI works. Consulting firms like Deloitte offer AI bias audits.
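As one concrete example of a bias check, U.S. employment practice has long used the “four-fifths rule” from the federal Uniform Guidelines on Employee Selection Procedures: if a group’s selection rate is less than 80% of the rate for the most-selected group, that is generally treated as evidence of possible adverse impact. The Python sketch below applies that rule of thumb to an AI screening tool’s outcomes. It is an illustration, not a description of any audit firm’s method; the group labels and numbers are made up, and passing or failing the check is not a legal conclusion.

```python
def selection_rates(outcomes: dict[str, tuple[int, int]]) -> dict[str, float]:
    """Map each group to selected / applicants, skipping groups with no applicants."""
    return {group: selected / applicants
            for group, (selected, applicants) in outcomes.items()
            if applicants > 0}


def impact_ratios(outcomes: dict[str, tuple[int, int]]) -> dict[str, float]:
    """Each group's selection rate divided by the highest group's rate."""
    rates = selection_rates(outcomes)
    best = max(rates.values(), default=0.0)
    if best == 0:
        # Nobody was selected, so there is no rate to compare against.
        return {group: 1.0 for group in rates}
    return {group: rate / best for group, rate in rates.items()}


def flag_adverse_impact(outcomes: dict[str, tuple[int, int]],
                        threshold: float = 0.8) -> list[str]:
    """Groups whose impact ratio falls below the four-fifths (80%) threshold."""
    return sorted(group for group, ratio in impact_ratios(outcomes).items()
                  if ratio < threshold)


if __name__ == "__main__":
    # Hypothetical results: (candidates advanced by the tool, candidates screened)
    results = {"group_a": (48, 80), "group_b": (12, 40)}
    print(impact_ratios(results))        # group_b's rate is about half of group_a's
    print(flag_adverse_impact(results))  # ['group_b']
```

A check like this only surfaces disparities in outcomes; explaining why they occur still requires understanding the model and its training data, as the list above describes.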

Digital divide. The gap between tech-savvy employees and those who are not tends to fall along the same lines that cause marginalization. Income level, place of origin, and age all affect whether a person has had technology training. Two best practices for writing inclusive job descriptions are sticking to essential skills and remaining committed to DEIB. Be sure that familiarity with AI and ChatGPT prompt writing doesn’t creep onto job requirement lists where it doesn’t belong.

Cultural misappropriation. AI image generation appears prone to cultural missteps. Because many AI tools are developed in the United States, they tend toward U.S.-based cultural references. An article on Medium shows AI putting a big, American-style smile on the faces of people from cultures where people don’t often smile this way. Smiling for portraits is not an authentic custom in many cultures, including many Indigenous, central and eastern European, and some Asian and Latin American cultures. Seeing these images is harmful because it erodes authentic culture.

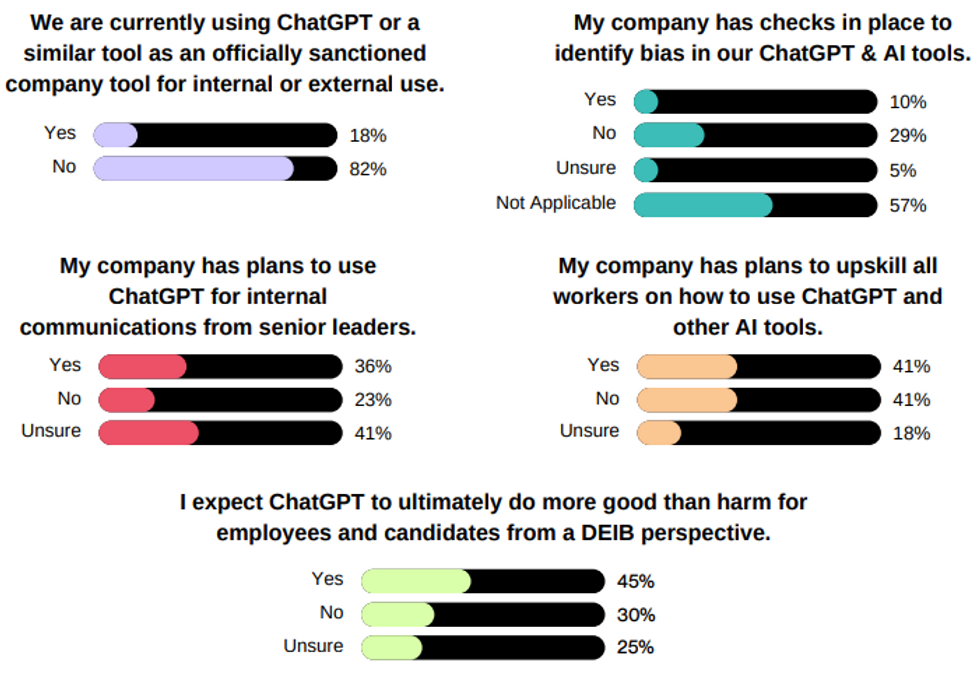

ChatGPT & DEIB poll results

A survey by Fishbowl of nearly 12,000 workers found that 43% of them were using ChatGPT or other AI tools at work. Yet, 68% of those using these AI tools are not disclosing their usage to their boss. How does that compare to PowerToFly’s partners?

During our Executive Forum, we polled participants about their ChatGPT and AI usage behavior and DEIB.


3 key recommendations for AI usage

We should be careful not to judge new technology too quickly. As Chamorro-Premuzic pointed out in the forum, more than 42,000 people die each year in car accidents in the U.S. In four months of 2022, 11 people were killed in accidents involving self-driving cars. Should AI cars be judged as killers? Or do humans cause more harm than AI in certain cases?

Business leaders can see clear benefits to incorporating AI tools. Keeping an analytical eye towards positive and negative impacts, there are intelligent ways to implement AI and manage risk at the same time.

1. Implement AI tools for efficiency tasks

Scraping. Not everyone is an expert on Boolean operators and effective search. Machines can “read” and comb through information much faster than humans. Scraping search engine results pages (SERPs) and other information sources is an effective use of AI for research and reading tasks.

- Generate Boolean search strings.

- Save and reuse search parameters.

- Optical character recognition (OCR).

- Finding specific language or keywords.
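To make “generate Boolean search strings” and “save and reuse search parameters” concrete, here is a small Python sketch that turns saved keyword groups into a search string. It assumes the common AND/OR/NOT, parentheses, and quoted-phrase syntax; exact syntax varies between search engines and recruiting platforms (some use a minus sign instead of NOT), and the function names are hypothetical.

```python
from collections.abc import Sequence


def quote(term: str) -> str:
    """Wrap multi-word phrases in double quotes so they are matched as exact phrases."""
    return f'"{term}"' if " " in term else term


def boolean_query(all_of: Sequence[Sequence[str]], none_of: Sequence[str] = ()) -> str:
    """Require one term from every group (OR within a group, AND across groups),
    then exclude every term in none_of."""
    groups = ["(" + " OR ".join(quote(t) for t in group) + ")" for group in all_of]
    query = " AND ".join(groups)
    for term in none_of:
        query += f" NOT {quote(term)}"
    return query


if __name__ == "__main__":
    # Saving the parameters, not just the final string, makes the search easy to reuse and tweak.
    saved_search = {
        "all_of": [["data analyst", "business analyst"], ["SQL", "Python"]],
        "none_of": ["intern"],
    }
    print(boolean_query(**saved_search))
    # ("data analyst" OR "business analyst") AND (SQL OR Python) NOT intern
```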

Coding. AI code tools can help programmers create high-quality code, generate code snippets, or translate from one programming language to another. AI tools are fast becoming standard practice among programmers. Some of the most popular AI code generators are:

- Codex.

- Copilot.

- ChatGPT.

Business Process Automation (BPA). Technology-based AI that builds project management into your work platform cuts down on some of the more boring management tasks in any job. It’s no wonder that machine learning and AI tools have been applied to BPA for years.

- Assigning tasks.

- Managing progress.

- Revisions and follow-ups.

- Approvals.

- External audits.
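Under the hood, many of these BPA features come down to a workflow with a fixed set of states and allowed transitions. The minimal Python sketch below is an illustrative assumption, not how Oracle, Alfresco, or any other specific platform implements it: it shows a task moving from assignment through submission to approval, with a revision loop and a history list that could support an audit trail.

```python
from enum import Enum


class Status(Enum):
    ASSIGNED = "assigned"
    IN_PROGRESS = "in progress"
    SUBMITTED = "submitted"
    REVISION_REQUESTED = "revision requested"
    APPROVED = "approved"


# Allowed moves between states; anything not listed here is rejected.
TRANSITIONS: dict[Status, set[Status]] = {
    Status.ASSIGNED: {Status.IN_PROGRESS},
    Status.IN_PROGRESS: {Status.SUBMITTED},
    Status.SUBMITTED: {Status.APPROVED, Status.REVISION_REQUESTED},
    Status.REVISION_REQUESTED: {Status.IN_PROGRESS},
    Status.APPROVED: set(),  # final state
}


class Task:
    """A hypothetical work item that records every status change for later review."""

    def __init__(self, title: str, assignee: str) -> None:
        self.title = title
        self.assignee = assignee
        self.status = Status.ASSIGNED
        self.history: list[Status] = [Status.ASSIGNED]

    def move_to(self, new_status: Status) -> None:
        """Change status if the workflow allows it; otherwise raise ValueError."""
        if new_status not in TRANSITIONS[self.status]:
            raise ValueError(f"cannot move from {self.status.value!r} to {new_status.value!r}")
        self.status = new_status
        self.history.append(new_status)


if __name__ == "__main__":
    task = Task("Quarterly vendor report", assignee="analyst")
    for step in (Status.IN_PROGRESS, Status.SUBMITTED, Status.REVISION_REQUESTED,
                 Status.IN_PROGRESS, Status.SUBMITTED, Status.APPROVED):
        task.move_to(step)
    print([s.value for s in task.history])
```

Encoding the allowed moves in one table keeps the rules easy to review, and the history list gives auditors a record of every step.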

2. Retain human writers for human content

Content expert Brooklin Nash demonstrates that there is a glaring difference between ChatGPT-generated content and content from a human writer.

Never forget your human audience. Remember that your readers are people and not machines. From a mission statement to an SEO blog post, the content that performs best is the content that people find educational and entertaining. To avoid alienating your target audience, be careful not to strip the human element from your brand — especially when speaking to DEIB topics.

Edit, edit, edit. If you use AI to produce content and copy as a starting point, be sure to have the budget and timeline to cover a hefty editing process. BuzzFeed already made headlines for not following this practice, and the brand has suffered for it.

Stay honest with DEIB. In DEIB circles, we are familiar with the phrase “nothing for us without us.” When your content or copy references any issue related to diversity, equity, inclusion, or belonging, you must make sure it is written by a person that can properly represent those interests. Looking for content about Juneteenth? Don’t run to ChatGPT. Hire a Black American writer. Give that writer the power of place to originate the message. Don’t limit diversity to a single revision or editing pass.

3. Increase transparency surrounding potential AI use

Disclosure of AI usage. Have an internal policy regarding disclosure of AI usage. If employees are using AI, their bosses and colleagues should be aware. Have a policy regarding AI usage for your third-party supply chain and contractors, and include it in your written contracts to manage risk. If suppliers and contractors use AI, you should be aware of that and require them to disclose it.

Educate employees about AI algorithms. Explainable AI is transparent AI. When we don’t understand what AI is, our imagination can get carried away. Educate employees about basic AI functions and any specific AI technology they are using. When employees understand how the AI works, they can step in with human judgment if it makes a mistake. Back in the ’80s, computer classes still reviewed binary code. Foundational knowledge of AI is critical for its transparent usage.

Single role employment. Avoid “overemployment,” which can lead to dependency on AI tools and single points of failure. If you expect an employee or full-time contractor to hold only one employment role for the duration of their hire, state this in both the job description and the work contract.

Recommended Resources

Participants shared these recommendations for further learning and exploration:

- EqualAI - a nonprofit organization, as well as a movement, focused on reducing unconscious bias in the development and use of artificial intelligence.

- The U.S. Equal Employment Opportunity Commission (EEOC)’s Artificial Intelligence and Algorithmic Fairness Initiative.

- PowerToFly’s ChatGPT and the Future of Work summit.

- I, Human: AI, Automation, and the Quest to Reclaim What Makes Us Unique by Tomas Chamorro-Premuzic.
