While AI might feel like an overused buzzword, this blanket term covers a wide range of exciting technologies – but how do you separate the good from the bad?

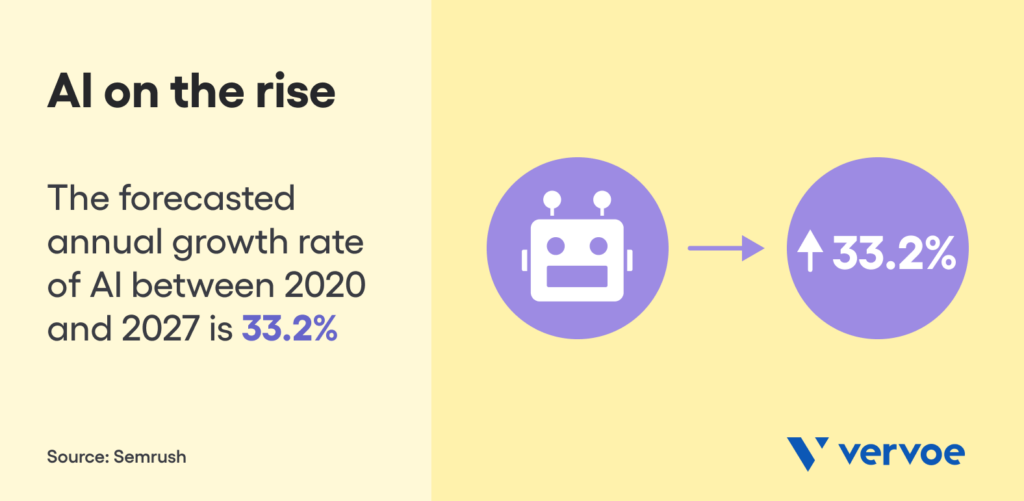

It’s no secret that the AI market is rapidly gaining momentum, and the numbers back it up. As the world scrambles to keep up with advancements in technology, Semrush puts the forecasted annual growth rate of AI between 2020 and 2027 at 33.2%.
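To put that rate in perspective, here is a minimal sketch of the arithmetic. It assumes the 33.2% figure compounds once a year and that 2020 to 2027 counts as seven yearly steps; neither assumption comes from the Semrush figure itself, and the function name is purely illustrative.

```python
def compound_growth_multiple(annual_rate: float, years: int) -> float:
    """Total growth multiple after compounding `annual_rate` for `years` years."""
    return (1.0 + annual_rate) ** years


# Assumption: 2020 to 2027 is treated as seven yearly compounding steps.
multiple = compound_growth_multiple(0.332, 7)
print(f"Market size multiple over the period: {multiple:.2f}x")
```

Under those assumptions, the market would end the period a little over seven times its starting size.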

Despite this, it’s crucial to remember that not all AI is created equal. Most AI systems follow a “decision tree” format: they apply sets of predetermined rules and don’t learn on their own. Machine learning, by contrast, is arguably the future of AI.

Artificial intelligence that incorporates machine learning works by combining large amounts of data with fast, iterative processing and intelligent algorithms, allowing the software to learn automatically from patterns or features in the data. Fundamentally, an AI system is only as good as the data it is fed, which is particularly important when AI is deployed in recruitment.

An introduction to the fundamentals of AI

Artificial intelligence is intelligence demonstrated by machines, as opposed to the natural intelligence displayed by animals including humans. Typically rolled out to complete tasks that are usually actioned by a human, AI technology is already powering many processes in our day-to-day lives — even if we don’t realize it.

In fact, common examples of artificial intelligence at work today include robots used for manufacturing, self-driving cars, automated financial investing, and even virtual assistants commonly deployed as a substitute for human customer service agents.

In the world of business, industry-specific AI that understands text is even helping hiring managers track down top candidates, and determine how well they will potentially perform on the job. When appropriately used, AI ultimately helps to drive down the cost and time it takes to perform a task.

As time goes on, the capabilities of artificial intelligence continue to advance thanks to machine learning, which is often defined by an AI’s ability to educate itself without the help of a human or a new data set. AI models are built to mimic reality, and the closer a model gets to the complexity and interconnectedness of reality, the better it is. While this is often what defines good AI, in practice the quality of any AI system is largely determined by the data sets a human originally feeds it, and by the quality of the known outcomes of those same data sets.

Although this advancement has proven to be revolutionary in many sectors, an understanding of AI limitations is starting to sink in — particularly when it comes to ethics and potential bias.

The conversation about ethical artificial intelligence in hiring

The game-changing promise of artificial intelligence is its ability to improve efficiency, bring down costs, and accelerate research and development. The challenge that governments and businesses face is doing so in a manner that is considered ethical and unbiased.

Private companies use AI software to make determinations about health and medicine, employment, creditworthiness, and even criminal justice without having to answer for how they’re ensuring that programs aren’t encoded, consciously or unconsciously, with structural biases.

While AI that can read and understand is deployed in a wide variety of settings, concern is mounting over using machine learning for recruitment. If left to its own devices and not carefully monitored, artificial intelligence may inadvertently assess, rank, and recommend candidates, or overlook others, through a biased algorithm.

Why concern is growing over AI bias and how good AI overcomes it

When ranking potential employees, an AI-based recruitment platform may unwittingly discriminate based on gender, race, or age. The tools also might not improve diversity in a business, as they are based on past company data and thus may promote candidates who are most similar to current employees.

Fundamentally, addressing ethical concerns and removing bias from an AI hiring algorithm is inextricably linked to the data the system is fed. One of the most important things (if not the most important thing) about machine learning done at Vervoe is making sure that you are only feeding data that makes sense for the computer to learn from, or what we refer to as “clean” data. This way, the machine never has access to information that could potentially be misused to form bias in the first place.

For those unfamiliar with the term, data cleaning is the process of fixing or removing incorrect, corrupted, incorrectly formatted, duplicate, or incomplete data within a dataset. Failure to adhere to these principles can result in an AI system grading candidates on things like whether they are wearing glasses or a scarf, or even whether they mention the word “children” in their application. Machine learning can be better than humans because it removes these subconscious biases, and that’s what good AI is all about.
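As a rough illustration of what data cleaning can look like in code, the sketch below removes incomplete and duplicate records and strips out fields a model should never learn from. It is not Vervoe’s actual pipeline; the field names and the `clean_records` helper are hypothetical and chosen only for the example.

```python
from typing import Any

# Hypothetical field names, chosen only for illustration.
REQUIRED_FIELDS = ("candidate_id", "answer_text")
EXCLUDED_FIELDS = ("gender", "age", "photo_url")


def clean_records(records: list[dict[str, Any]]) -> list[dict[str, Any]]:
    """Return a cleaned copy of `records`; the input list is left untouched."""
    cleaned = []
    seen = set()
    for record in records:
        # Drop fields the model should never see, and trim stray whitespace.
        row = {
            key: value.strip() if isinstance(value, str) else value
            for key, value in record.items()
            if key not in EXCLUDED_FIELDS
        }
        # Remove incomplete rows: a required field is missing or empty.
        if any(row.get(field) in (None, "") for field in REQUIRED_FIELDS):
            continue
        # Remove duplicates, keeping the first occurrence.
        fingerprint = tuple(sorted((k, repr(v)) for k, v in row.items()))
        if fingerprint in seen:
            continue
        seen.add(fingerprint)
        cleaned.append(row)
    return cleaned
```

Excluding sensitive fields before training is the design choice that matters most here: a model cannot form a bias around information it never receives, although proxies for that information can still hide in the remaining fields.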

In fact, an example of a bad AI hiring algorithm was recently identified at one of the world’s biggest corporations. In 2018, it was reported that Amazon had abandoned the development of an AI-powered recruitment engine because it identified proxies for gender on candidates’ CVs, and used them to discriminate against female applicants.

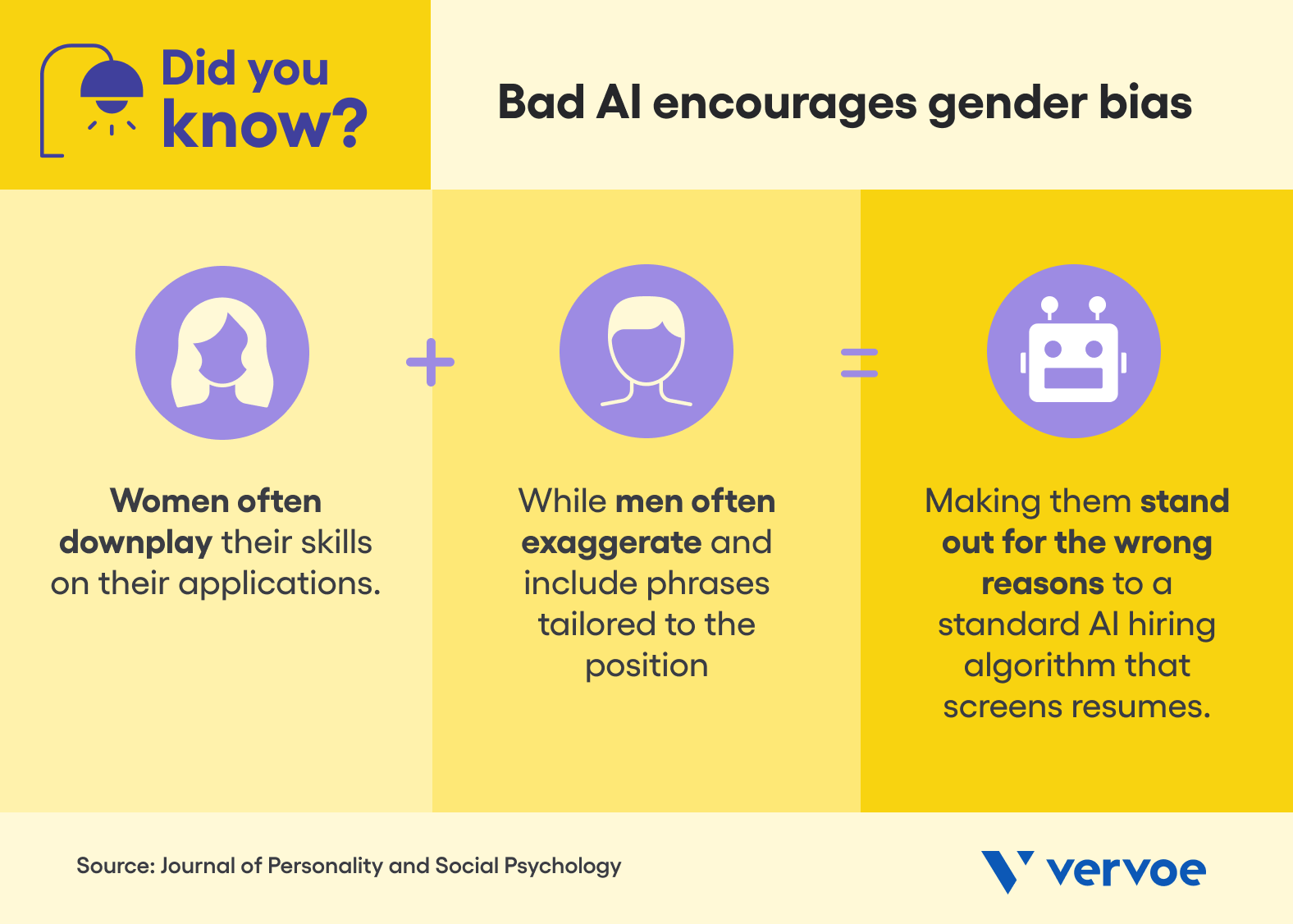

In addition, research from the Journal of Personality and Social Psychology suggests that women often downplay their skills on their applications, while men often exaggerate and include phrases tailored to the position, making them stand out for the wrong reasons to a standard AI hiring algorithm that screens resumes. What’s more, candidates may also unconsciously use gendered language by including words that are associated with gender stereotypes.

As an example, men are more likely to use assertive words like “leader,” “competitive,” and “dominant,” whereas women may use words like “support,” “understand,” and “interpersonal.” This can put female applicants at a disadvantage by replicating the gendered ways in which hiring managers judge applicants. When the algorithm scans their resumes compared with those of their male counterparts, it may read the men as more qualified based on the active language they’re using.
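To make the mechanism concrete, here is a minimal sketch of how a keyword-driven screen could end up counting gendered language. The word lists are just the six examples from the paragraph above, and the function name is hypothetical; a real audit would need a much larger, validated lexicon.

```python
import re
from collections import Counter

# Word lists taken from the examples in the text, for illustration only.
ASSERTIVE_WORDS = {"leader", "competitive", "dominant"}
SUPPORTIVE_WORDS = {"support", "understand", "interpersonal"}


def gendered_word_counts(text: str) -> dict[str, int]:
    """Count exact, case-insensitive matches from each word list."""
    tokens = Counter(re.findall(r"[a-z]+", text.lower()))
    return {
        "assertive": sum(tokens[word] for word in ASSERTIVE_WORDS),
        "supportive": sum(tokens[word] for word in SUPPORTIVE_WORDS),
    }
```

A resume screener that rewards the first group of words more than the second would score two equally capable candidates differently, which is exactly the kind of signal that should be audited or kept out of the model.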

For a growing number of businesses around the globe, the solution is not to use an AI-based job portal, but to instead harness the technology in a later stage of the hiring process such as using job simulations. By assessing an applicant’s ability to perform the role through a skills assessment, AI that can read and understand focuses on the work — and not the person.

How to separate good AI from bad AI

If we are to believe how AI technology is depicted in films such as The Matrix or Ex Machina, or even the opinion of Elon Musk, artificial intelligence should be treated with caution. AI is capable of learning over time from pre-fed data and past experiences, but it cannot be creative in its approach and is limited in its capacity to innovate.

As such, any conversational AI software should be consistently monitored and adjusted. Given the fact that technology is constantly evolving, AI systems should never be approached with a ‘set and forget’ mindset. In addition, good AI is never designed to replace a human, but to empower them with greater efficiency.

For businesses looking to implement an AI-based recruitment platform, Pramudi Suraweera – Principal Data Scientist at SEEK – has a wealth of experience working with AI that can read and understand as a part of his role. According to Pramudi, the key to partnering with the right AI software development company is to do your research on which systems are actually capable of what you’re trying to accomplish.

“Computing is cheap, but it also creates a double-edged sword because you can have solutions that haven’t thought through the impacts. They can sound great, but they can end up being biased towards actually solving the problem,” said Pramudi.

“Explore options that reduce time in your day-to-day job instead of making you redundant. In addition, don’t be afraid to ask questions about how the potential company uses data for their AI-powered product, and what their policy is on its responsible use.”

In simple terms, we should think of AI as a spectrum. At one end lie the online self-service menus offered by the likes of your phone service provider, while at the other, there are self-driving cars. The intelligence of an AI system, or its power, lies in the data it is fed by humans, and what it does with that data.

Like it or not, the global AI market will reach a size of half a trillion US dollars in 2023. About 28% of people claim to fully trust AI, while 42% claim to generally accept it. A whopping 83% of companies consider using AI in their strategy to be a high priority, and this is reflected by the number of businesses using artificial intelligence, which grew by 300% in 5 years. While recruitment is believed to be one of the leading industries that have adopted AI technology with open arms, using it responsibly is a conversation that will remain ongoing.

Meet the market leaders in machine learning for recruitment

Vervoe is an end-to-end solution that is proudly revolutionizing the hiring process. By empowering businesses to create completely unique assessments that are tailored to suit the specific requirements of a role, Vervoe predicts performance using job simulations that showcase the talent of every candidate.

Filling a position is a costly, time-consuming, and often stressful process when using traditional hiring practices. To see people do the job before they get the job, book a demo today and let our experienced team run you through Vervoe’s full range of ready-made and tailored solutions.